January 16, 2024

Sign of the Times: The Battle Against AI Goes Big

I closed out 2023 by writing about one lawsuit over AI and copyright and we’re starting 2024 the same way. In that last post, I focused on some of the issues I expect to come up this year in lawsuits against generative AI companies, as exemplified in a suit filed by the Authors Guild and some prominent novelists against OpenAI (the company behind ChatGPT). Now, the New York Times Company has joined the fray, filing suit late in December against Microsoft and several OpenAI affiliates. It’s a big milestone: The Times Company is the first major U.S. media organization to sue these tech behemoths for copyright infringement.

As always, at the heart of the matter is how AI works: Companies like OpenAI ingest existing text databases, which are often copyrighted, and write algorithms (called large language models, or LLMs) that detect patterns in the material so that they can then imitate it to create new content in response to user prompts.

The Times Company’s complaint, which was filed in the Southern District of New York on December 27, 2023, alleges that by using New York Times content to train its algorithms, the defendants directly infringed on the New York Times’ copyright. It further alleges that the defendants engaged in contributory copyright infringement and that Microsoft engaged in vicarious copyright infringement. (In short, contributory copyright infringement is when a defendant knew of infringing activity and induced or contributed to it; vicarious copyright infringement is when a defendant could have stopped a direct infringer but didn’t, and benefited financially from the infringing activity.) Finally, the complaint alleges that the defendants violated the Digital Millennium Copyright Act by removing copyright management information included in the New York Times’ materials, and accuses the defendants of engaging in unfair competition and trademark dilution.

The defendants, as always, are expected to claim they’re protected under “fair use” because their unlicensed use of copyrighted content to train their algorithms is transformative.

What all this means is that while 2023 was the year that generative AI exploded into the public’s consciousness, 2024 (and beyond) will be when we find out what federal courts think of the underlying processes fueling this latest data revolution.

I’ve read the New York Times’ complaint (so you don’t have to) and here are some takeaways:

- The Times Company tried to negotiate with OpenAI and Microsoft (a major investor in OpenAI) but was unable to reach an agreement that would “ensure [The Times] received fair value for the use of its content.” This likely hurts the defendants’ claims of fair use.

- As in the other lawsuits against OpenAI and similar companies, there’s an input problem and an output problem. The input problem comes from the AI companies ingesting huge amounts of copyrighted data from the web. The output problem comes from the algorithms trained on the data spitting out material that is identical (or nearly identical) to what they ingested. Where the output is a near copy of the original, I think the AI companies’ fair use claim is in for rough going. However, they have a better fair use argument where the AI models create content “in the style of” something else.

- The Times Company’s case against Microsoft comes, in part, from the fact that Microsoft is alleged to have “created and operated bespoke computing systems to execute the mass copyright infringement . . .” described in the complaint.

- OpenAI allegedly favored “high-quality content, including content from the Times” in training its LLMs.

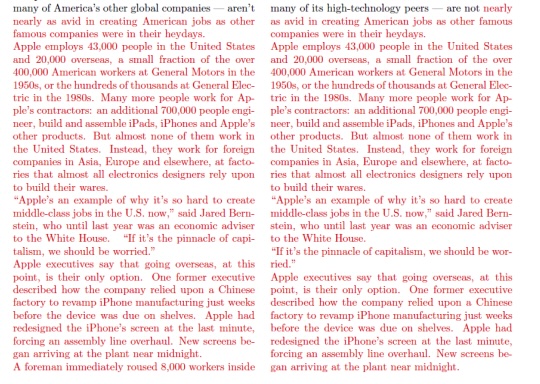

- When prompted, ChatGPT can regurgitate large portions of the Times’ journalism nearly verbatim. Here’s an example taken from the complaint showing the output of ChatGPT on the left in response to “minimal prompting,” and the original piece from the New York Times on the right. (The differences are in black.)

- According to the New York Times, this content, easily accessible for free through OpenAI, would normally be available only behind its paywall. The complaint also contains similar examples from Bing Chat (a Microsoft product) that go far beyond what you would get in a normal search using Bing. (In response, OpenAI says that this kind of wholesale reproduction is rare and is prohibited by its terms of service. I presume that OpenAI has since fixed this issue, but that doesn’t absolve OpenAI of liability.)

- Because OpenAI keeps the design and training of its GPT algorithms secret, expect the confidentiality order in this case to be intense.

- While the New York Times Company can afford to fight this battle, many smaller news organizations lack the resources to do the same. In the complaint, the Times Company warns of the potential harm to society of AI-generated “news,” including its devastating effect on local journalism which, if the past is any indication, will be bad for all of us.

Stay tuned. OpenAI and Microsoft should file their response, which I expect will be a motion to dismiss, in late February or so. When I get it, I’ll see you back here.